Phew! It’s been a fun couple of days. I’m going to provide a day-by-day summary of what’s been going on.

Tuesday

More or less the entire day was taken up by travel. The flight was mostly uneventful, with a one-hour layover in Charlotte, NC. The hour was long enough to get from one terminal to the other and grab food, yet short enough that by the time I was done eating, the first class passengers were about to board. For virtually the entire flight, I was preoccupied with a book I brought along.

Wednesday

Waking up for a 9am event has never been easier! I guess it was the time zone difference: instead of going to bed at about 3am, I went to bed at around midnight (which happens to be 3am on the other coast!).

I woke up at about 6:30, and not because of the alarm. I went back to sleep. At 7:30, I woke up for real. Before long, it was 8:30, and I was already at the conference about to get my proceedings and badge. Free food (orange juice and croissants) followed.

Nine o’clock rolled around, and the conference began. The keynote was OK. I wasn’t amazed by it, although the speaker made valid points.

At 11, the first session began: virtualization. This was one of the sessions I’d been looking forward to. Well, really I was interested in one paper: vNUMA: A Virtual Shared-Memory Multiprocessor. The name summarizes the idea very nicely. Why was I interested in it? A long time ago (in 2003, when you couldn’t walk down the street and buy a multi-core system), I had the same idea. It’s a rather obvious idea, as is the name. At first, I was going to hack the Linux kernel to accomplish it (I still have a couple of patches I started), but other things ate up my time. (The fact that everyone I mentioned it to told me the overhead of paging over Ethernet would make it impractical made me want to do it even more!) As it turns out, the folks from the University of New South Wales who got a publication out of it started working on it in 2002.

Their implementation targets the Itanium architecture. They said they chose it because, when they started, Intel was pushing Itanium as the architecture that would replace x86. Unfortunately, I didn’t get to talk to any of them at the conference.

The lunchtime invited talk was nice. It was about how the faculty at Harvard tried to make the intro to computer science course fun and appealing while still covering the same important introductory material. The big change: they used Amazon’s Elastic Compute Cloud (EC2) instead of the on-campus computer network. They liked being in charge of the system (no waiting for some admin to handle a change request), but at the same time they didn’t like being the admins themselves. Some of the students were also not exactly “thrilled” to hear about the cloud so often, especially since they never had to use it in any cloud-specific way. One of the most memorable parts of the talk was when the speaker whipped out a phone book, asked the audience how to find something in it, and shortly afterward proceeded to do a binary search: ripping the book in half, tossing one half across the podium, and then ripping the remainder in half.
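For anyone who hasn’t seen the phone book trick in code form, here is a minimal binary search sketch. The phone book is modeled as an alphabetically sorted Python list of names, and `find_entry` is a hypothetical helper of mine, not anything from the talk. Each loop iteration is one “rip”: look at the middle entry and discard the half that can’t contain the name.

```python
from typing import Optional, Sequence


def find_entry(book: Sequence[str], name: str) -> Optional[int]:
    """Return the index of `name` in the alphabetically sorted `book`, or None.

    Each iteration "rips the book in half": check the middle entry and
    throw away the half that cannot contain the name.
    """
    lo, hi = 0, len(book)  # search the half-open range [lo, hi)
    while lo < hi:
        mid = (lo + hi) // 2
        if book[mid] == name:
            return mid
        if book[mid] < name:
            lo = mid + 1  # name sorts later: toss the first half (and the middle)
        else:
            hi = mid      # name sorts earlier: toss the second half
    return None


phone_book = ["Adams", "Brown", "Clark", "Davis", "Evans", "Garcia", "Jones", "Smith"]
print(find_entry(phone_book, "Jones"))   # 6
print(find_entry(phone_book, "Miller"))  # None
```

Since every rip halves what’s left, a 1,000-page book needs only about 10 rips (2^10 = 1024), which is what makes the stunt work on stage. In real Python code you’d reach for the standard `bisect` module instead.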

Afterward came the networking session. The one fun talk was StrobeLight: Lightweight Availability Mapping and Anomaly Detection. The idea in a nutshell: ping the subnet, ping it again some time later, and count the number of IPs whose status changed. Essentially, XOR the two results and count the ones. It’s a simple idea that apparently works rather well.
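The XOR-and-count step is easy to sketch. Below, each ping sweep is encoded as a bitmap (one bit per address in the subnet, set if that address replied), and the number of changed hosts is the popcount of the XOR of two sweeps. The bitmap encoding, the helper names `snapshot` and `changed_count`, and the sample addresses are my own assumptions for illustration, not StrobeLight’s actual implementation. The sweep itself (actually sending the pings) is left out; the responders are just hard-coded lists.

```python
import ipaddress
from typing import Iterable


def snapshot(subnet: str, responders: Iterable[str]) -> int:
    """Encode one ping sweep as a bitmap: bit i is set if address i of the subnet replied.

    Hypothetical helper; the list of responders is assumed to come from some sweep.
    """
    net = ipaddress.ip_network(subnet)
    base = int(net.network_address)
    bitmap = 0
    for addr in responders:
        offset = int(ipaddress.ip_address(addr)) - base
        if not 0 <= offset < net.num_addresses:
            raise ValueError(f"{addr} is not in {subnet}")
        bitmap |= 1 << offset
    return bitmap


def changed_count(before: int, after: int) -> int:
    """Number of addresses whose reachability flipped between two sweeps."""
    return bin(before ^ after).count("1")  # XOR, then count the ones


subnet = "192.0.2.0/29"  # documentation address range, 8 addresses
t0 = snapshot(subnet, ["192.0.2.1", "192.0.2.2", "192.0.2.5"])
t1 = snapshot(subnet, ["192.0.2.1", "192.0.2.3", "192.0.2.5", "192.0.2.6"])
print(changed_count(t0, t1))  # 3: .2 went away, .3 and .6 appeared
```

A count that jumps well above a subnet’s usual churn would presumably be the kind of thing the anomaly detection side flags.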

The next session was about storage. It started with a paper that got a best paper award. One of the authors is Swami, a former FSL member now at the University of Wisconsin-Madison. While he never said so during the talk, they essentially implemented a stackable filesystem. Right after it came a talk by guys from the MIT AI lab about decentralized deduplication in SAN cluster filesystems.

After a short break, the last invited talk of the day happened. A dude from Sun talked about SunSPOTs. SunSPOT is a small, Java-based wireless sensor/actuator platform. It reminded me of several other such platforms, but seemed a lot more polished. Unfortunately, its Java-centric approach was rather disappointing. (More or less everyone who knows me knows that I don’t like Java.)

The day concluded with a poster session & food.

Thursday

Again, waking up wasn’t a problem. Breakfast this time was bagels.

9am: the first talk of the distributed systems session was Object Storage on CRAQ: High-Throughput Chain Replication for Read-Mostly Workloads. This is one of the papers I intend to take a closer look at. I found the other two less interesting.

11am: the kernel development session had two interesting talks. The first, Decaf: Moving Device Drivers to a Modern Language, was about splitting device drivers into two portions: a performance-critical part that stays in C, and a non-performance-critical part that can be moved to “a modern language” (read: Java). While I strongly disagree with the language choice, the overall idea is interesting. Java (along with other more managed languages) provides stronger static checking than C. They actually took some Linux drivers and split them up. I want to go over their evaluation again. The other interesting talk was about executing filesystems in a userspace framework (Rump File System: Kernel Code Reborn). Unlike FUSE and other attempts, this one aims to take in-kernel filesystem code and execute it in userspace without any modifications.

The lunchtime invited talk was about how hard it is to teach students to program, and how some compiler error messages are completely misleading and confuse students.

I zoned out for most of the 2pm session about automated management. There were emails & other things to catch up on.

The short paper session started off nicely with The Restoration of Early UNIX Artifacts. The speaker mentioned that he managed to get his hands on a copy of the first edition UNIX kernel source. Although it was slightly mangled, he got it up and running. After a bit more effort, he also got his hands on some near-second edition UNIX userspace. Another short paper was about how Linux kernel developers respond to static analysis bug reports.

The last invited talk of the day was unusual. It was about the Antikythera mechanism. He used Squeak EToys (a Smalltalk environment) to simulate the mechanism. He put the “software” up on the web: http://www.spinellis.gr/sw/ameso/


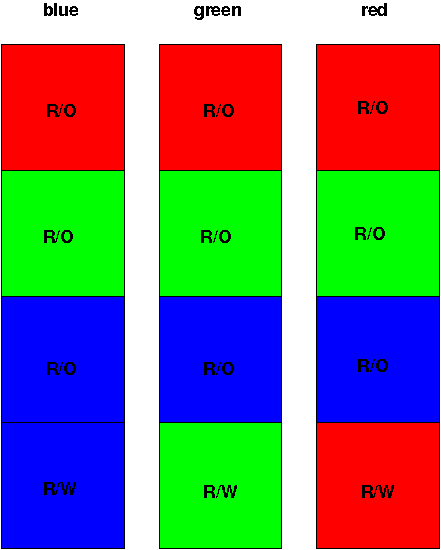

Afterwards, more food, this time without posters. After the food, there were some BOFs. The one I went to was about ancient UNIX artifacts, and there I got to see first edition UNIX running. Really neat. It felt like UNIX: the same, but different in some ways. The prompt was ‘@’. The ‘cd’ command didn’t exist; instead you had ‘chdir’. That’s right, the “terseness” of UNIX wasn’t always there! The ‘rwx’ bits you see when you run ls -l were different: you had one ‘x’ bit and two rw pairs. On my way out, I got more or less dragged into a mobile cluster BOF (or whatever the title was). The most interesting part was when we got to talk about Plan 9, all the way at the end.

David Brin
