Memory Leaks

Alright, I think I’ve had just about enough. Why does Amarok eat up 22% of my 1 GB of RAM after 4 days of running (and playing music for maybe 18 hours of those 4 days)? Why does Firefox use up 33% of my RAM in 4 days?

Why is it that when I shut down the app, and restart it, the usage is 4–5 times less?

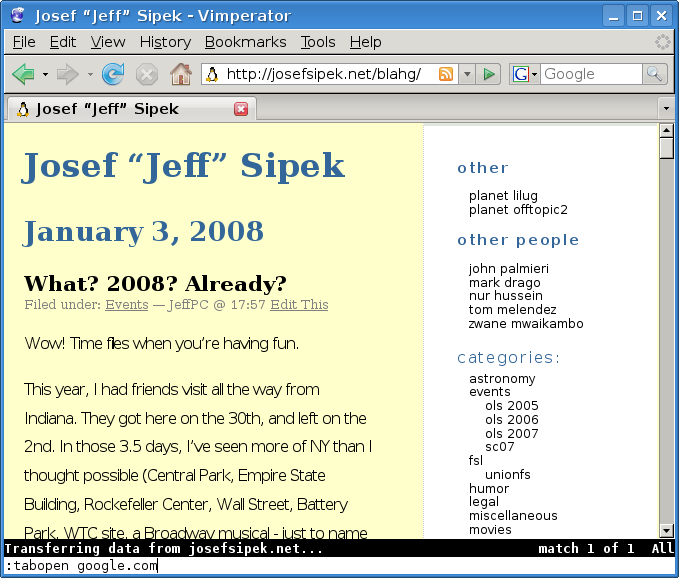

| | Amarok | Firefox 2 |
|---|---|---|
| before app restart | 225 MB | 338 MB |
| after app restart | 58 MB | 72 MB |

The only reasons I can think of are the applications being buggy, or having really crappy defaults.

Buggy Applications

Dear developers, believe it or not, when you allocate memory, you also have to free it when you are done with it. If you don’t, you are committing a crime against humanity known as a “memory leak”. This memory is unusable, and essentially becomes dead weight the process carries around. Since it is not used, the OS may swap it out, and before long, your swap file/partition becomes full of memory that has been leaked.

Contrary to popular belief, freeing memory is really simple.

For you C++ coders (yes, that includes you Amarok folks), you simply use the delete keyword followed by a pointer to what you want to free (or delete[] if it was allocated with new[]). For example,

delete some_pointer;

If you are using C, the free function is your friend. Just call it with a pointer to what you want to free as its one argument. For example,

free(some_pointer);

Now, if you are working on a larger project, there might be wrappers around the memory management (malloc/free, new/delete) functions, but whatever the “free this memory” function is called, USE IT.

I can almost hear all the managed-language fans yell: “Just use a language that does garbage collection, and you won’t have to worry about freeing memory.” Well, you are WRONG!

Garbage collectors keep track of which allocations are still reachable from the running program, and whenever they notice that some piece of memory is unreachable, they treat it as garbage, and free it. The catch is that “reachable” is not the same as “still needed”. Here’s my favorite example for causing leaks in a garbage collected language:

Suppose that you have implemented a class that works as a stack. You implemented it as an array of elements, and an index into the array to mark the top of the stack. Pushing an element is trivial: you just increment the index, and set the reference in the array to the object you want to store. Popping is really easy: you just decrement the index, and you’re done. Right? WRONG! Decrementing the index changes that one integer variable, but the reference in the array is still valid, and therefore the object is still reachable as far as the garbage collector is concerned. Sure, next time you push into that slot, the previous reference will get overwritten, and the previous allocation will get freed (assuming that there are no other references). But what if you never push that many elements back onto the stack? What if you experienced some high-load spike? You’ll have a large number of objects incorrectly referenced, tying up memory, and quite possibly making the entire system slower.

How can you solve this? Pretty simple: when you pop, reset the reference in the vacated slot to some “null” quantity. In Java, that means using the null literal. For example,

some_reference = null;

In Python, None is the proper keyword to use:

some_reference = None
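To make the stack example concrete, here is a minimal Python sketch of both versions. The class names (LeakyStack, FixedStack, Payload) and the helper survives_pop are made up for illustration, and bounds checking on push is left out to keep it short. A weak reference is used only to observe whether the popped object can still be reached.

```python
import gc
import weakref


class LeakyStack:
    """Fixed-capacity stack whose pop() leaves a stale reference behind."""

    def __init__(self, capacity):
        self._slots = [None] * capacity  # backing array
        self._top = -1                   # index of the top element, -1 when empty

    def push(self, item):
        self._top += 1
        self._slots[self._top] = item

    def pop(self):
        if self._top < 0:
            raise IndexError("pop from empty stack")
        item = self._slots[self._top]
        self._top -= 1                   # the slot still references item
        return item


class FixedStack(LeakyStack):
    """Same stack, but pop() clears the slot it vacates."""

    def pop(self):
        if self._top < 0:
            raise IndexError("pop from empty stack")
        item = self._slots[self._top]
        self._slots[self._top] = None    # drop the stack's own reference
        self._top -= 1
        return item


class Payload:
    """Stand-in for a large object (hypothetical, only for this demo)."""


def survives_pop(stack):
    """Push one object, pop it, and report whether it is still alive."""
    obj = Payload()
    probe = weakref.ref(obj)  # observes obj without keeping it alive
    stack.push(obj)
    stack.pop()               # caller discards the popped value
    del obj                   # drop our own reference too
    gc.collect()
    return probe() is not None
```

With these definitions, survives_pop(LeakyStack(4)) should report that the object is still alive, because the array slot keeps it reachable, while survives_pop(FixedStack(4)) should report that it is gone.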

The lesson is: free the memory you allocated (or drop the references you are holding) when you are done using it.
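If you are not sure whether your code follows that lesson, measure it. The sketch below uses Python’s standard tracemalloc module to compare how much memory is left behind by a function that parks its buffers in a long-lived list versus one that lets them go. The names retained, handle_request and traced_growth, as well as the 10 KB buffer size, are assumptions picked for the demo.

```python
import tracemalloc

retained = []  # hypothetical long-lived container, e.g. a history list


def handle_request(keep):
    """Allocate a 10 KB buffer; optionally park it in the long-lived list."""
    buf = bytearray(10_000)
    if keep:
        retained.append(buf)  # still reachable, so it is never reclaimed


def traced_growth(keep, rounds=1000):
    """Return how many bytes of traced memory the rounds left behind."""
    tracemalloc.start()
    before, _peak = tracemalloc.get_traced_memory()
    for _ in range(rounds):
        handle_request(keep)
    after, _peak = tracemalloc.get_traced_memory()
    tracemalloc.stop()
    return after - before
```

Calling traced_growth(True) should show roughly 10 MB left behind after 1000 rounds, while traced_growth(False) should show next to nothing. A number that keeps climbing with the number of rounds is the signature of a leak.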

Crappy Defaults

Many large applications (Firefox included) have many options you can set that affect their behavior. The default options should cover 95% or more of the users (or at least the largest majority possible). Why such a high number? Well, suppose you settle for making 90% of your users happy out of the box…that means that 1 in 10 people that try your app will not be happy with the defaults. How many will bother checking if there even are knobs they can turn to make it work the way they want? Not all. Some will just try to install another open source app written by someone else that does pretty much the same thing. So, the default options should make as many people happy as possible.

How does this tie into a third of my RAM being used by Firefox? Simple: I do not know if there are any knobs that would “fix” the problem I am seeing. For all I know, someone decided that it was a great idea to be really aggressive about caching web page content in memory. That’s fine if you have 16GB RAM, but guess what: most people don’t.
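To illustrate what a memory-conscious default could look like, here is a rough sketch of a cache with a byte budget that evicts the least recently used entries once the budget is exceeded. This is not how Firefox’s cache works (I have no idea how it works); BoundedCache, max_bytes and the 32 MB default are all hypothetical.

```python
from collections import OrderedDict


class BoundedCache:
    """LRU cache that evicts old entries once a byte budget is exceeded."""

    def __init__(self, max_bytes=32 * 1024 * 1024):  # default is an assumption
        self.max_bytes = max_bytes
        self._used = 0
        self._entries = OrderedDict()  # key -> bytes, least recently used first

    def put(self, key, data):
        if key in self._entries:
            # Replacing an entry: give back the old entry's bytes first.
            self._used -= len(self._entries.pop(key))
        self._entries[key] = data
        self._used += len(data)
        # Evict least recently used entries until we fit the budget again.
        while self._used > self.max_bytes and self._entries:
            _, evicted = self._entries.popitem(last=False)
            self._used -= len(evicted)

    def get(self, key):
        data = self._entries.get(key)
        if data is not None:
            self._entries.move_to_end(key)  # mark as recently used
        return data

    @property
    def used_bytes(self):
        return self._used
```

The point of the design is that the knob exists and its default is conservative: users with plenty of RAM can raise max_bytes, and everyone else never has to know about it.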

Whatever it is (defaults that don’t make sense or memory leaks), Firefox and Amarok have problems that must get addressed. What is one of the reasons people complain about Microsoft Word? It takes up tons of memory. Well, I don’t feel like throwing over 200 MB of RAM at an application that plays MP3s, displays a playlist, and cover art.

And before someone suggests that I use Firefox 3… I realize that it is all super-duper-better-than-ever, but let’s think for a second. When the original Firefox was released, it was hailed as the non-leaky, light-weight Mozilla. Then, things started to get slow again. Firefox 2 was supposed to be the super-fast, non-leaky browser. What happened? What happened to my >300 MB of RAM? Now, Firefox 3 is all the rage…do you see the pattern yet?

I think this brings up a larger issue. It’s no secret that I do some Linux kernel coding from time to time. In the kernel, there are leaks at times, but it seems that the kernel leaks are effectively non-existent compared to applications like Firefox. Don’t believe me? How come you can have a server run for over a year and it responds just as well after the year as it did when you booted it? Imagine running Firefox for a year without restarting it. Can you even imagine that? The Linux kernel doesn’t seem to be the only “non-leaky” project, either (there are leaks, but they are very rare, and probably mostly in the ugliest parts of the kernel: device drivers). Apache performs quite well even after running for a while, so does PostgreSQL, and the list goes on and on.

Why is it that Firefox and other projects seem to have so many problems? The only thing I can think of is the quality control that goes into checking new code before it’s committed. In the kernel community, a patch may get rewritten a dozen times, submitted to mailing lists for review, and get comments from people familiar with the subsystem, but also from other developers (and budding developers trying to understand the existing code). It takes a lot of effort to get a piece of code into the kernel, but in the end, that code is well written, well reviewed, and it should benefit most users. Do the Firefox et al. communities do this? I do not know, but somehow, I suspect that it isn’t the case.