It’s been a while since I started telling people that benchmarking systems is hard. I’m here today because of an article about an article about an article from the ACM Transactions on Storage. (If anyone refers to this post, they should cite it as “blog post about an article about…” ;) .)

While the statement “benchmarking systems is hard” is true for most of systems benchmarking (yes, that’s an assertion without supporting data, but this is a blog and so I can state these opinions left and right!), the underlying article (henceforth the article) is about filesystem and storage benchmarks specifically.

For those of you who are getting the TL;DR feeling already, here’s a quick summary:

- FS benchmarking is hard to get right.

- Many commonly accepted fs benchmarks are wrong.

- Many people misconfigure benchmarks, yielding useless data.

- Many people don’t specify their experimental setup properly.

Hrm, I think I just summarized a 56-page journal article in 4 bullet points. I wonder what the authors will have to say about this :)

On a related note, it really bothers me when regular people attempt to “figure out” which filesystem is the best, and then share their findings. It’s the sharing part that gets me. Why? Because the findings are uniformly bad.

Here’s an example of a benchmark gone wrong…

Take Postmark. It’s a rather simple benchmark that simulates the IO workload of an email server. Or does it? What do mail servers do? They read. They write. But above all, they try to ensure that the data actually hit the disk. POSIX specifies a wonderful way to ensure data hits the disk: fsync(2). (You may remember fsync from O_PONIES & Other Assorted Wishes.) So, a typical email server will append a new email to the mailbox, and then fsync it. Only then will it acknowledge receipt of the email to the remote host. How often does Postmark run fsync? The answer is simple: never.
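
To make the append-then-fsync pattern concrete, here is a minimal sketch in Python. It is not taken from any real mail server; `deliver_message` and the mailbox path are hypothetical names made up for illustration, and a real server would likely have more to worry about (locking, and syncing the containing directory when the file is newly created).

```python
import os


def deliver_message(mailbox_path: str, message: bytes) -> None:
    """Append one message to a mailbox file and force it to stable storage.

    Hypothetical helper for illustration only.
    """
    # O_APPEND makes every write land at the current end of the file.
    fd = os.open(mailbox_path, os.O_WRONLY | os.O_APPEND | os.O_CREAT, 0o600)
    try:
        view = memoryview(message)
        while view:
            # os.write may write fewer bytes than requested, so loop.
            written = os.write(fd, view)
            view = view[written:]
        # Ask the kernel to push the file's data to the storage device.
        os.fsync(fd)
    finally:
        os.close(fd)
    # Only once fsync has returned would a server acknowledge the message.
```

A benchmark that claims to model a mail server but never reaches the `os.fsync` step is measuring a different, much cheaper workload.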

Now you may be thinking…I’ve never heard of Postmark, so who uses it? Well, according to the article (the 56-page one), out of the 107 papers surveyed, 30 used Postmark. Postmark is so easy to run that even non-experts try to use it. (The people at Phoronix constantly try to pretend that they figured out benchmarking. For example, in EXT4, Btrfs, NILFS2 Performance Benchmarks they are shocked (see page 2) that some filesystems take 500 times longer for one of their silly tests, even though people have pointed out to them what barriers are, and that barriers will have an impact on performance.)

Granted, non-experts are expected to make mistakes, but you’d expect that people at Sun would know better. Right? Well, they don’t. In their SOLARIS ZFS AND MICROSOFT SERVER 2003 NTFS FILE SYSTEM PERFORMANCE WHITE PAPER (emphasis added by me):

> This white paper explores the performance characteristics and differences of Solaris ZFS and the Microsoft Windows Server 2003 NTFS file system through a series of publicly available benchmarks, including BenchW, Postmark, and others.

Sad. Perhaps ZFS isn’t as good as people make it out to be! ;)

Alright, fine, Postmark doesn’t fsync, but it should otherwise be OK, right? Wrong again! Take the default parameters (table taken from the article):

| Parameter | Default Value | Number Disclosed (out of 30) |
| --- | --- | --- |
| File sizes | 500-10,000 bytes | 21 |
| Number of files | 500 | 28 |
| Number of transactions | 500 | 25 |
| Number of subdirectories | 0 | 11 |
| Read/write block size | 512 bytes | 7 |
| Operation ratios | equal | 16 |
| Buffered I/O | yes | 6 |
| Postmark version | - | 7 |

First of all, note that a large number of papers left some of the parameters unspecified. The other interesting thing is the default configuration. Suppose that all 500 files grow to 10,000 bytes (in practice they’ll have random sizes in the specified range). That means the most space they can take up is 5,000,000 bytes, or a bit under 5 MiB. Since there’s no fsync, chances are that the data will sit in the page cache and never hit the disk during the run! This easily explains why the default configuration executes in a fraction of a second. These defaults were reasonable many years ago, but not today. As the article points out:
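
If you want to check the arithmetic yourself, here is a tiny sketch. The two constants come from the default-parameter table; the function name is just something I made up for this example.

```python
NUM_FILES = 500          # Postmark default number of files (from the table)
MAX_FILE_SIZE = 10_000   # bytes, upper end of the default file-size range


def worst_case_bytes(num_files: int, max_file_size: int) -> int:
    """Upper bound on the data set size if every file hits the maximum size."""
    return num_files * max_file_size


total = worst_case_bytes(NUM_FILES, MAX_FILE_SIZE)
print(total)                     # 5000000
print(round(total / 2**20, 2))   # 4.77 (MiB)
```

A working set that small fits comfortably in the RAM of pretty much any machine you would benchmark today.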

> Having outdated default parameters creates two problems. First, there is no standard configuration, and since different workloads exercise the system differently, the results across research papers are not comparable. Second, not all research papers precisely describe the parameters used, and so results are not reproducible.
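
One cheap way to avoid the "parameters not described" problem is to write the full configuration down next to the results. The sketch below is only an illustration: `PostmarkRun` and `describe` are hypothetical names, the field names are mine, and this is not Postmark's own configuration syntax. The default values are the ones from the table above.

```python
import json
from dataclasses import asdict, dataclass
from typing import Optional


@dataclass(frozen=True)
class PostmarkRun:
    """Every parameter from the table, so nothing is left implicit."""
    file_size_min_bytes: int = 500
    file_size_max_bytes: int = 10_000
    num_files: int = 500
    num_transactions: int = 500
    num_subdirectories: int = 0
    block_size_bytes: int = 512
    operation_ratios: str = "equal"
    buffered_io: bool = True
    postmark_version: Optional[str] = None  # has no default; must be filled in


def describe(run: PostmarkRun) -> str:
    """Return a JSON description suitable for publishing with the results."""
    if run.postmark_version is None:
        raise ValueError("record the Postmark version before publishing results")
    return json.dumps(asdict(run), indent=2, sort_keys=True)
```

Calling `describe(PostmarkRun(postmark_version="..."))` with the version you actually ran gives you a blob you can paste into an appendix, which is more than most of the 30 papers managed.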

Later on, in the 3 pages dedicated to Postmark, the article states:

> An essential feature for a benchmark is accurate timing. Postmark uses the time(2) system call internally, which has a granularity of one sec. There are better timing functions available (e.g., gettimeofday) that have much finer granularity and therefore provide more meaningful and accurate results.
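
Here is a rough sketch of why one-second granularity hurts. It is in Python rather than C, so it does not call time(2) or gettimeofday directly: I am assuming that truncating `time.time()` to whole seconds is a fair stand-in for a one-second clock, and I am using `time.perf_counter()` as the fine-grained clock. `run_timed` is a made-up helper.

```python
import time


def run_timed(workload):
    """Return (whole_seconds, fine_seconds) elapsed while running workload()."""
    coarse_start = int(time.time())     # truncated to whole seconds
    fine_start = time.perf_counter()    # high-resolution monotonic clock
    workload()
    fine_elapsed = time.perf_counter() - fine_start
    coarse_elapsed = int(time.time()) - coarse_start
    return coarse_elapsed, fine_elapsed


if __name__ == "__main__":
    # A stand-in for a benchmark run that finishes in a fraction of a second.
    coarse, fine = run_timed(lambda: time.sleep(0.05))
    print(f"one-second clock: {coarse} s, fine clock: {fine:.4f} s")
```

For a run this short, the coarse clock can only ever report 0 or 1 second, depending on whether the run happened to straddle a second boundary. Neither number tells you anything.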

Anyway, now that we have beaten up Postmark and it is cowering in the corner of the room, let’s take a look at another favorite benchmark people like to use — a compile benchmark.

The great thing about compile benchmarks is that they are really easy to set up. Chances are that someone interested in running benchmarks already has some toolchain set up - so a compile benchmark consists of timing the compile! Easy? Definitely.

One problem with compile benchmarks is that they depend on a whole lot of state. They depend on the hardware configuration, software configuration (do you have libfoo 2.5 or libfoo 2.6 installed?), as well as the version of the toolchain (gcc 2.95? 2.96? 3.0? 3.4? 4.0? 4.2? or is it LLVM? or MSVC? or some other compiler? what about the linker?).

The other problem with them is…well, they are CPU bound. So why are they used for filesystem benchmarks? My argument is that they are useful for demonstrating that the change the researchers made does not incur a significant amount of CPU overhead.
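
If you are not sure whether your favorite "filesystem benchmark" is really CPU bound, you can compare the CPU time a command burns against its wall-clock time. The following is a sketch under a few assumptions: it relies on the child-process counters from `os.times()`, which are only meaningful on Unix-like systems, and `cpu_fraction` is a name I invented. The two demo commands are toy Python one-liners, not real compiles.

```python
import os
import subprocess
import sys
import time


def cpu_fraction(argv):
    """Run argv and return (wall_seconds, child_cpu_seconds / wall_seconds)."""
    before = os.times()
    start = time.perf_counter()
    subprocess.run(argv, check=True)    # waits for the child to exit
    wall = time.perf_counter() - start
    after = os.times()
    # User plus system CPU time consumed by child processes we waited for.
    cpu = ((after.children_user - before.children_user)
           + (after.children_system - before.children_system))
    return wall, cpu / wall


if __name__ == "__main__":
    busy = [sys.executable, "-c", "sum(i * i for i in range(3_000_000))"]
    idle = [sys.executable, "-c", "import time; time.sleep(0.5)"]
    for name, argv in (("busy", busy), ("idle", idle)):
        wall, frac = cpu_fraction(argv)
        print(f"{name}: wall {wall:.2f} s, CPU fraction {frac:.2f}")
```

A fraction close to 1 on a single-threaded job (or higher on a parallel build) suggests the run spent its time computing rather than waiting on the disk, which is exactly what you would expect from a compile.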

Anyway, I think I’ll stop ranting now. I hope you learned something! You should go read at least the Linux Magazine or Byte and Switch article. They’re good reading. If you are brave enough, feel free to dive into the 56 pages of text. All of these will be less rant-y than this post. Class dismissed!

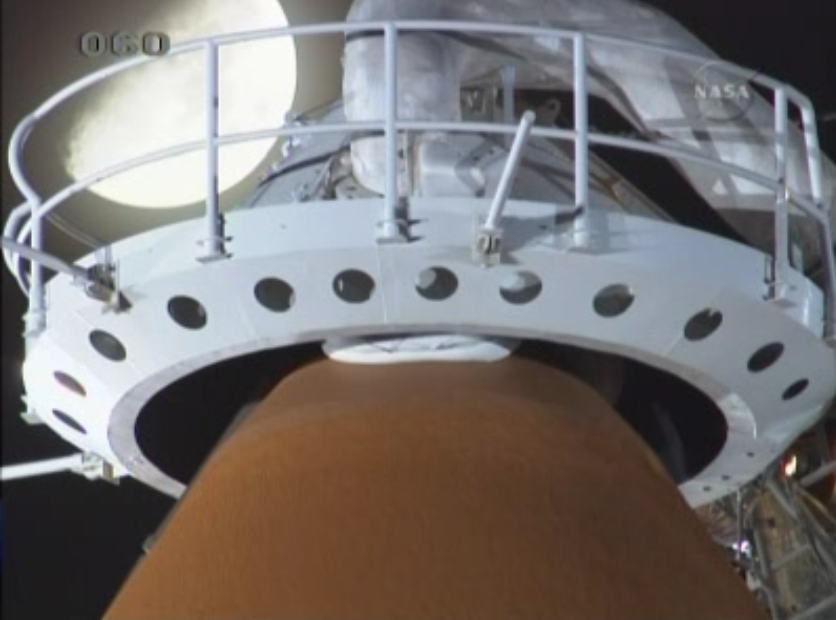

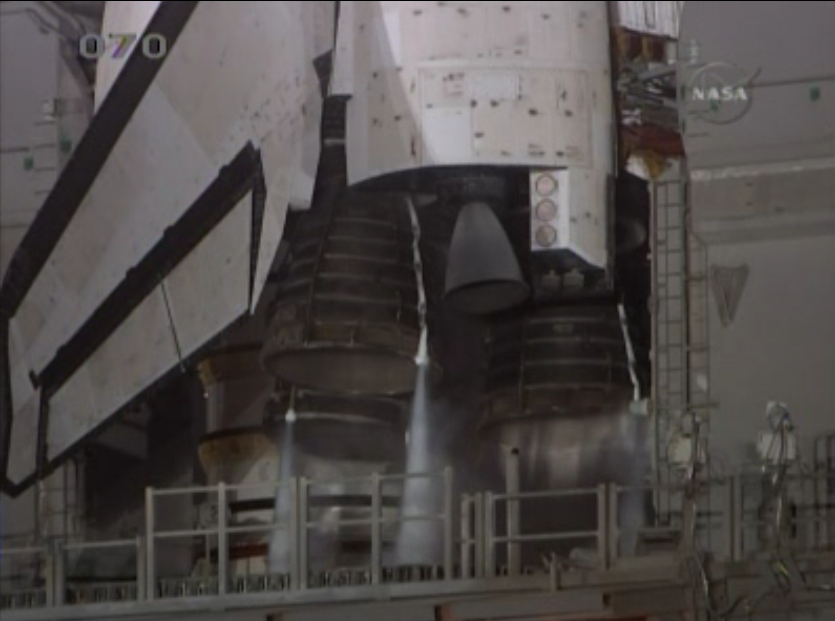

Apollo Guidance Computer (AGC).

Apollo Guidance Computer (AGC).